Reimagining Living Ontologies is an improvisational, collaborative data art performance with responsive visuals that combines scientific and artistic approaches to data visualisation. The project uses biophysical data from the human heart to drive computer-generated audio-visual scenery inside an immersive 360-degree video projection dome in BetaSpace, a Cinema & Media Arts research space at York University (Toronto, Canada). The core research questions and objectives that drive this project are to: 1) evaluate current data practices within artistic, scientific and economic realms; 2) identify the practices and challenges involved in harnessing and interpreting biophysical data; 3) develop an artistically rich and innovative data artwork that builds on mutually agreeable data transactions and innovative technologies; 4) propose, exhibit and perform creative knowledge-building systems that are embedded in artistic and research-creation domains.

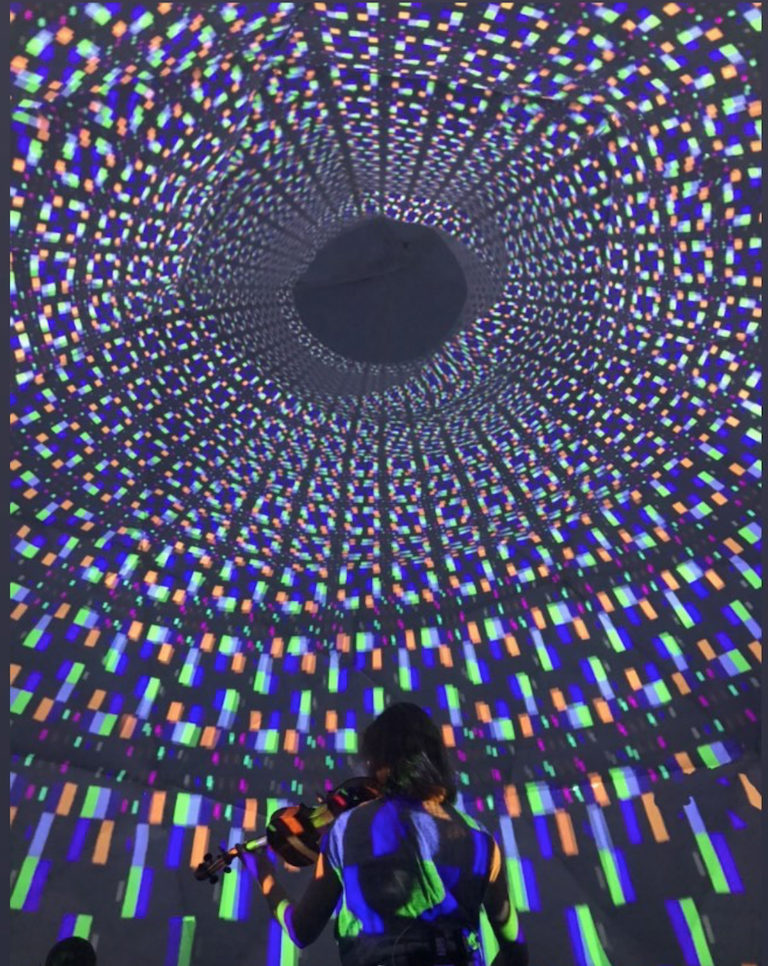

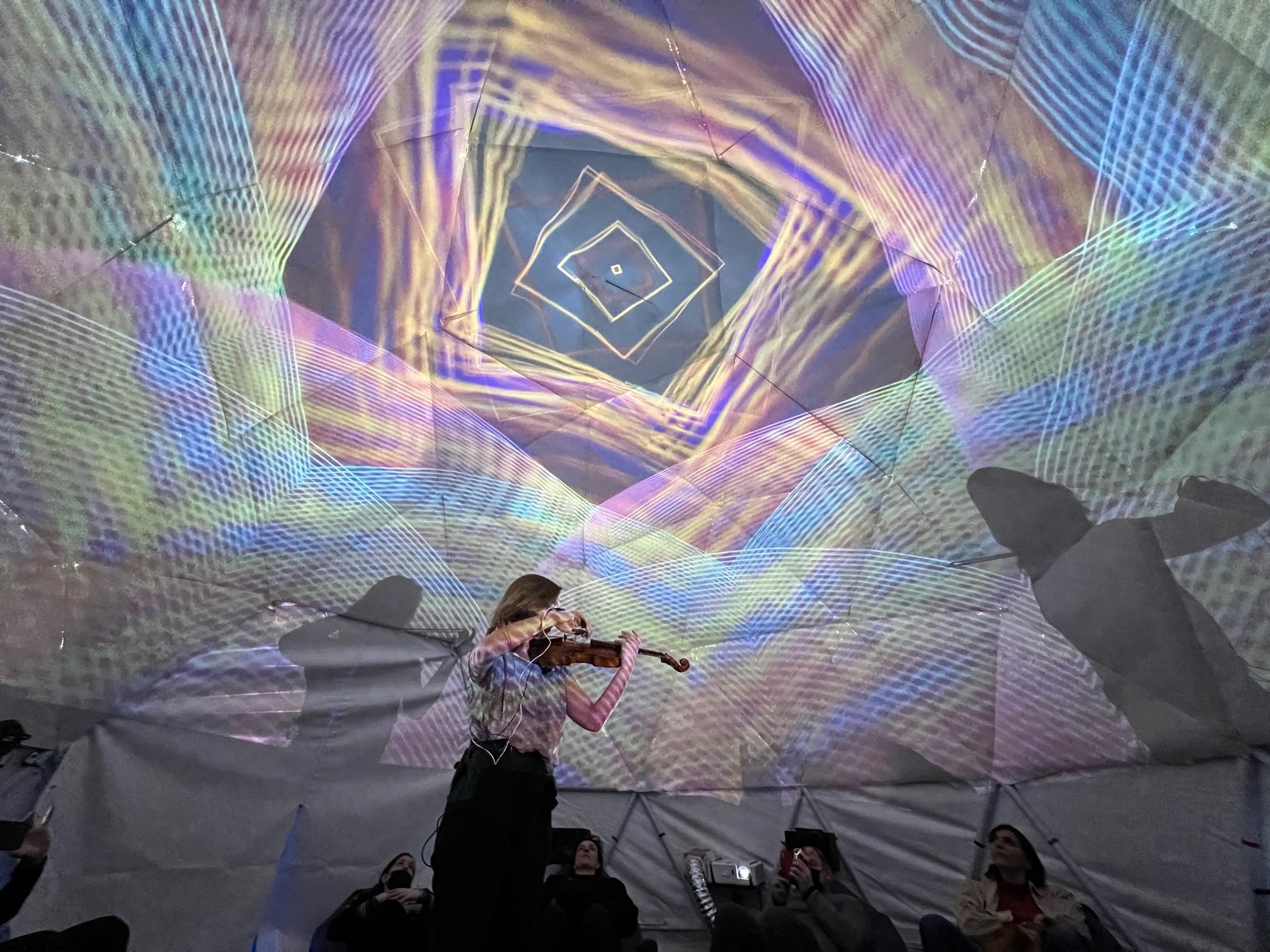

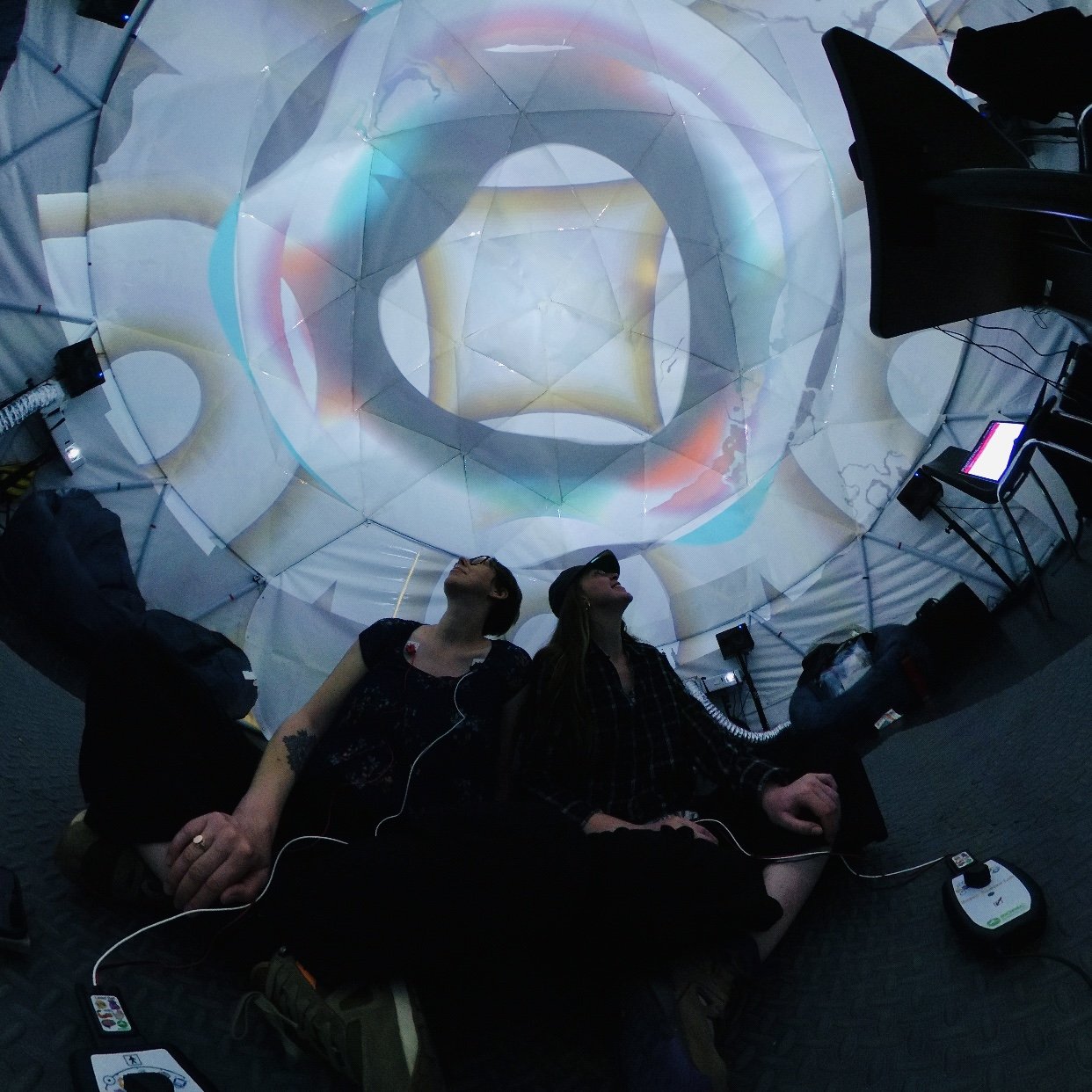

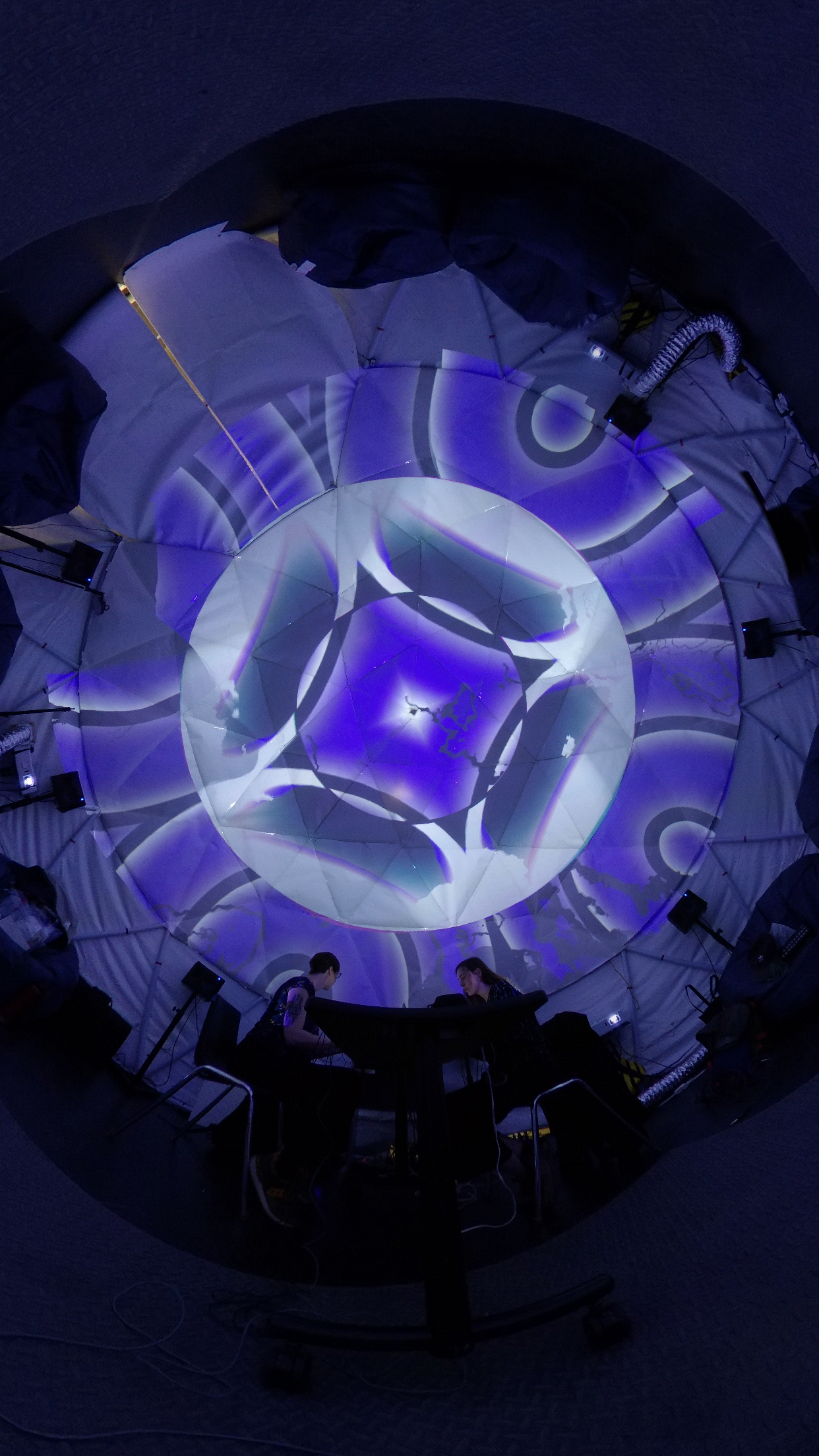

Reimagining Living Ontologies is a live performance by two performers, violinist Dr Amy Hillis and visual artist Ilze Briede [artist alias Kavi], in which biophysical data from the heart is interpreted as real-time visuals and patterns projected inside a 360-degree video projection dome. The goal in developing this collaborative technological piece was twofold: to explore new ways of seeing the human body as a complex and artistically expressive mediator of the hidden processes happening within it, and to develop a responsive performative system that co-creates with live data. Reimagining Living Ontologies aims to expand the notion of embodiment and data so that it crosses the boundaries between human and non-human spaces: the physical human body, the network for data exchange, the digital software tools and, finally, a physical performance space mediated through the emerging web of connected data, biofeedback, visuals and sounds. A significant part of developing this prototype was envisioning a functional and responsive audio-visual system that creates meaningful connections among performers and audiences.

Keywords.

Biophysical sensing, Electrocardiogram (ECG), heart activity data, real-time performance, generative patterns, data visualisation, immersive projection, and improvisation.

///////////////////////


The work with biosensing and live coding is further explored in the Bio Immersants Collective, which consists of Kavi and Hrysovalanti Maheras [a.k.a. Fereniki]. As a collective whose name is inspired by digital artist Char Davies's piece Osmose, we are committed to exploring the human sensorium with the help of technology. The immersant, for Davies, is a person surrounded by artificial and mediated environments who, as a result, experiences a perceptual shift of their physical self. We follow the same philosophical approach when conceptualising embodied experiences expressed as alternative viewpoints through sensors and technological interventions. With this project, we aim to expand the notion of embodiment and data so that it crosses the boundaries between human and non-human spaces: the human body, the network for data exchange, the digital software tools and, finally, a physical performance space mediated through the emerging web of connected data, visuals and sounds. By placing the human body at the centre of our work, we use technologies in performance to open up alternative ways of perceiving, experiencing and knowing. This work is also inspired and informed by data-driven art, cybernetic systems, psychophysics and pre-Socratic philosophical discourses. Like Muriel Cooper’s information landscapes, where data becomes an artistic and fluid body, Kavi and Fereniki co-create an embodied technological performance that expresses the behaviour and hidden patterns of biological and artificial systems. Our research-creation work explores the intersections of art and science, where bio-physiological measurements trigger movements and changes in generative visualisations and algorithmic audio to evoke a deeper and more meaningful presence.

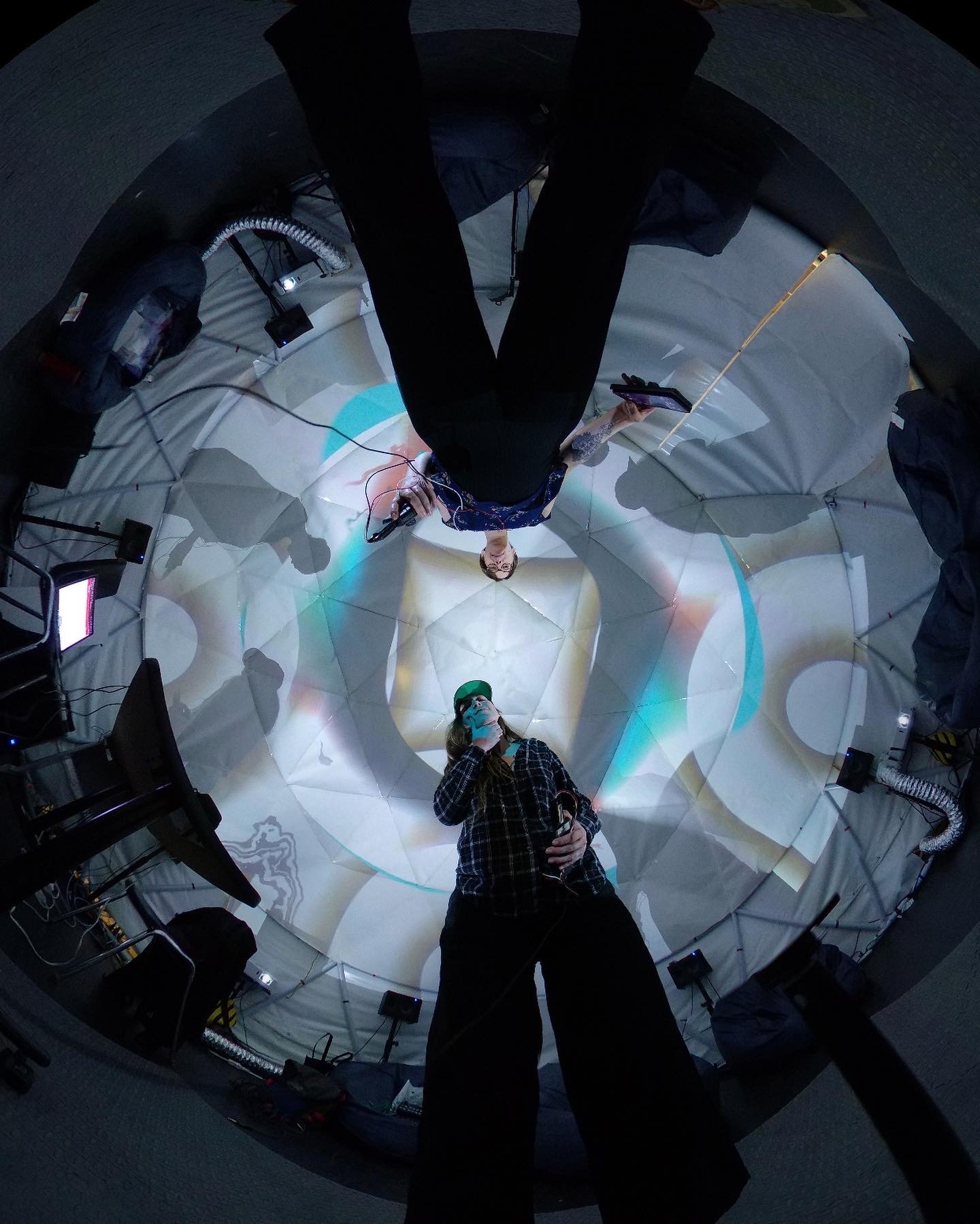

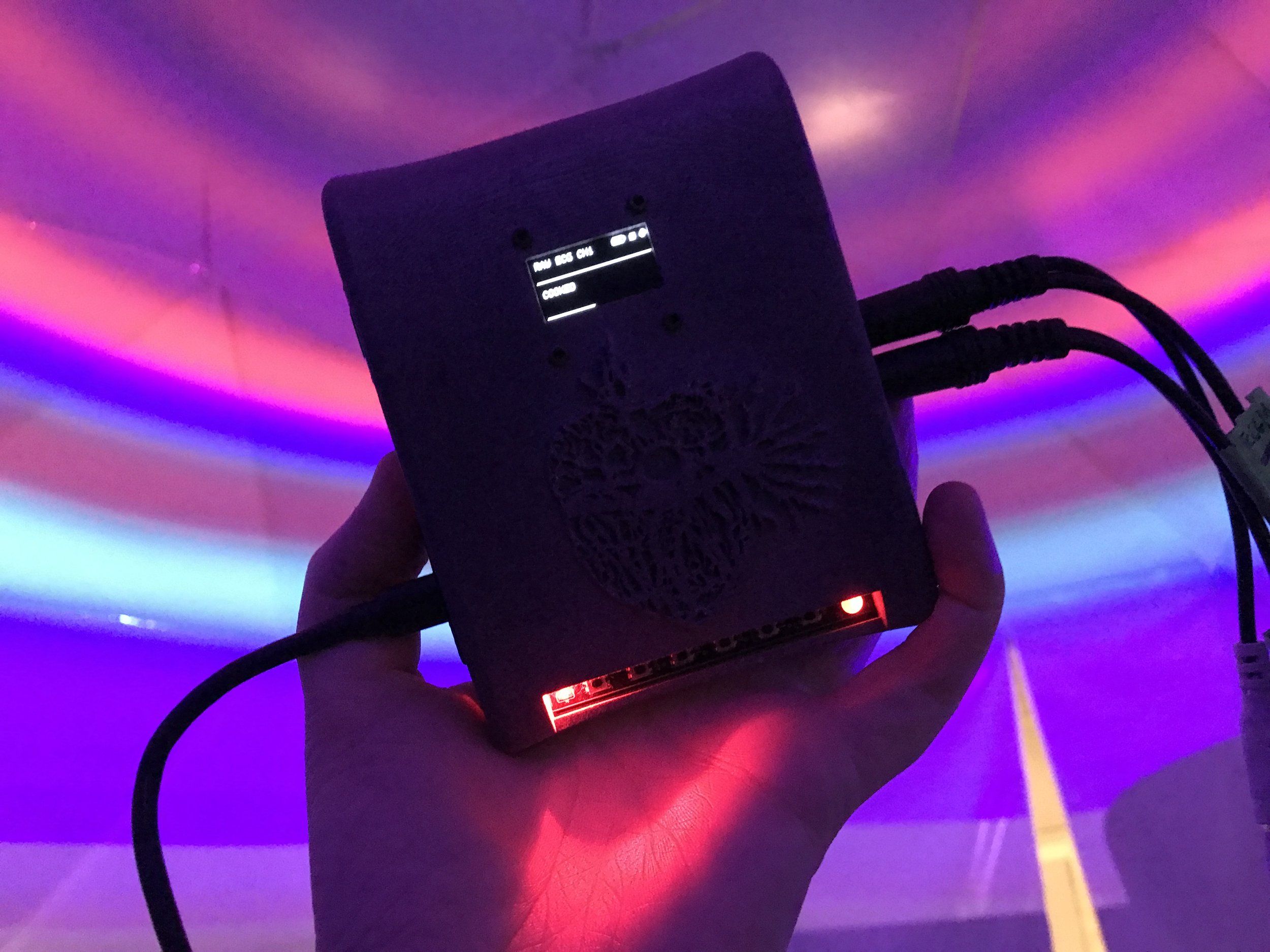

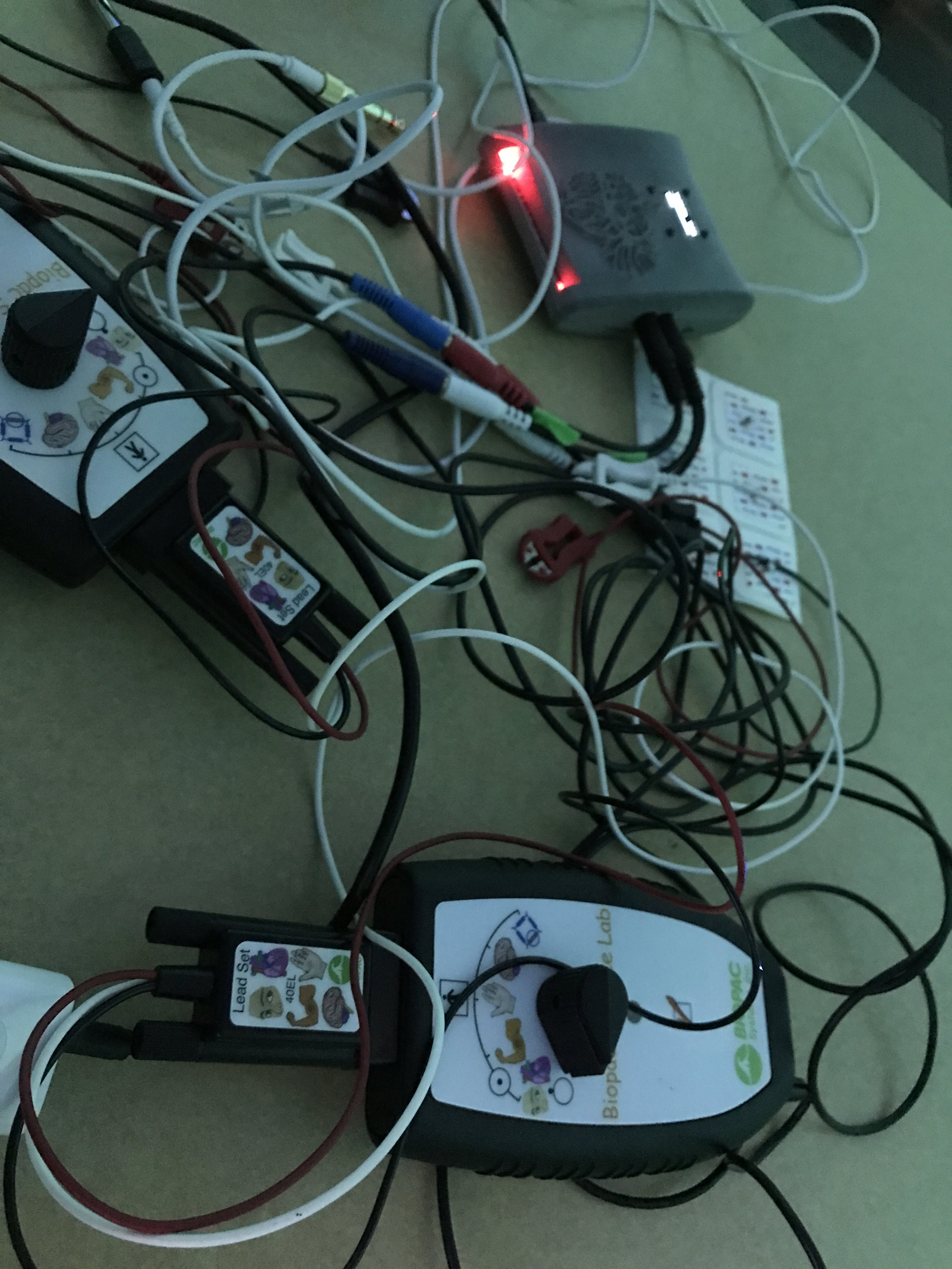

The project’s development and rehearsals have been made possible by generous support from Prof. Taien Ng-Chan, a director of the immersive dome structures situated in the BetaSpace research lab, which is part of Cinema and Media studies at York University. The Reimagining Living Ontologies collaborations have been made possible by Pacis Pak, a technology developed by scholars and artists Prof. Mark-David Hosale and Alan Macy. This technology reads bio-physiological processes such as heart activity, brain waves and muscle tension and communicates them directly to the performers' tools, which create sound and visuals on the fly.

So far, we have presented this research and live coding performance at the York University Congress. We are also proposing a new performance, titled Coiled Soma, for ICLC 2024 in Shanghai.

Immersive storytelling is an impactful way to connect people and create memorable shared experiences. During the proposed live performance, biological heart and brain data are gathered from the performers' bodies using Pacis Pak hardware and software developed by Prof. Mark-David Hosale and bio-medical designer and artist Alan Macy (https://www.ndstudiolab.com/pacis/pacis-pak). Simultaneously, this data is streamed via a local network and made available for audio and visual generation in real time. Visual landscapes are created through co-creative live coding between the human performer and their living body, allowing hidden yet present bio-physiological processes to alter and surprise the flow of the algorithmic structures. Kavi uses the TouchDesigner and Hydra live coding environments, which support interactive and immersive content creation and give access to biological heart data in real time. Hryso uses the SuperCollider coding environment to manage data streams and create a real-time soundscape that responds to biophysical and visual data. The sound is generated from heart rate variability and respiration data. The audio spatialization and visual immersion design are connected to the idea of reversing real-world acoustic and visual experiences and expressing what would otherwise be impossible to perceive.
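As a rough illustration of the data path described above, the following Python sketch derives one common heart rate variability measure (RMSSD, the root mean square of successive differences between beat-to-beat intervals) and packs it into a single message that could be sent over a local network. It is a minimal sketch under stated assumptions, not the Pacis Pak implementation: the text does not name the network protocol, so the use of Open Sound Control (OSC) over UDP is an assumption, and the address `/heart/rmssd`, port `7000`, the 0 to 100 ms scaling range and the sample intervals are all hypothetical.

```python
import math
import socket
import struct


def rmssd(rr_ms):
    """Root mean square of successive differences between RR intervals (ms)."""
    if len(rr_ms) < 2:
        raise ValueError("need at least two RR intervals")
    diffs = [b - a for a, b in zip(rr_ms, rr_ms[1:])]
    return math.sqrt(sum(d * d for d in diffs) / len(diffs))


def normalise(value, low, high):
    """Clamp value to [low, high] and rescale it to the range 0..1."""
    if high <= low:
        raise ValueError("high must be greater than low")
    return min(1.0, max(0.0, (value - low) / (high - low)))


def _osc_string(text):
    """Encode an OSC string: ASCII, null-terminated, padded to 4 bytes."""
    data = text.encode("ascii") + b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def osc_message(address, value):
    """Build an OSC message carrying one 32-bit big-endian float."""
    return _osc_string(address) + _osc_string(",f") + struct.pack(">f", value)


def send(packet, host="127.0.0.1", port=7000):
    """Send one UDP datagram; host and port are hypothetical defaults."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(packet, (host, port))


if __name__ == "__main__":
    # Hypothetical beat-to-beat intervals in milliseconds.
    intervals = [800, 810, 790, 800, 830, 795]
    # Assumed mapping: 0..100 ms of RMSSD becomes a 0..1 control value.
    control = normalise(rmssd(intervals), 0.0, 100.0)
    send(osc_message("/heart/rmssd", control))
```

A normalised 0 to 1 value is a convenient shape for a control signal because the receiving visual or audio patch can rescale it to any parameter (brightness, filter cutoff, pattern density) without knowing the physiological units.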

Coiled Soma introduces an immersive live coded audiovisual experience that integrates biosensing technologies, enabling the extension of the body into the virtual realm. This innovative approach pushes the boundaries of performing bodies, tapping into internal human processes as a source of creativity and beauty. Rooted in uncertainty and driven by the goal of evoking profound emotions, the performance strives to cultivate transparency and vulnerability via algorithmic interventions. Through collaborative efforts and intimate engagement, the artists aim to forge a deeper connection, emphasizing clear and honest communication in their artistic expression. This commitment goes beyond mere observation, fostering an atmosphere that facilitates a more profound and meaningful connection between the performers and the audience.

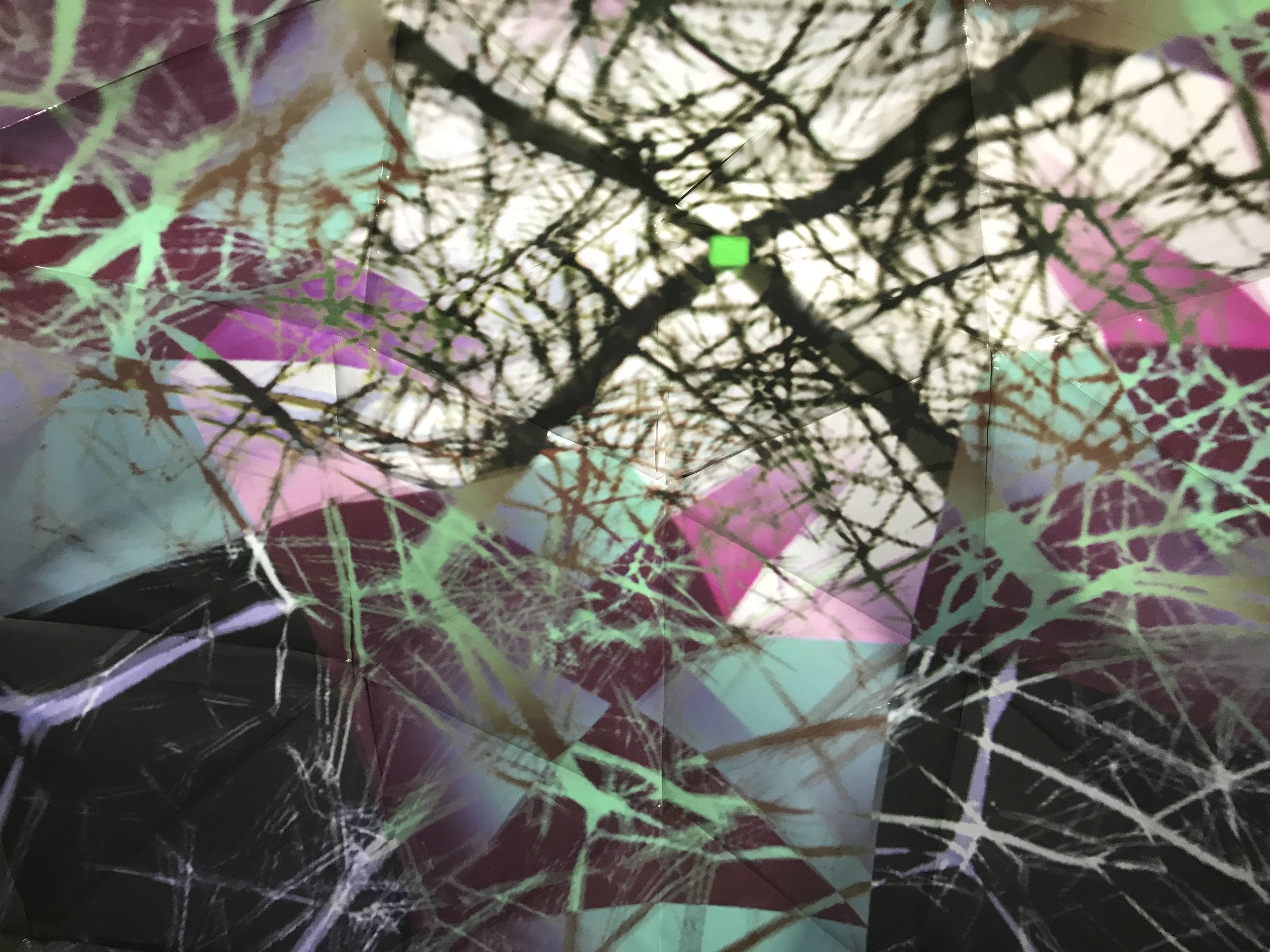

These are sketches and mood boards from my personal archives of works and prototypes. They help conceptualise the idea of patterns and segmentation, and how to correlate multi-channel audio with multi-level, hierarchical visualisations. The data is also essential in shaping, augmenting and filtering different sonic and visual systems, thus synthesising new forms and possibilities.